Claude Code is Anthropic’s command-line coding tool, and it can be configured to send its requests to other AI providers, as long as they offer an Anthropic-compatible API. In this guide, you’ll learn how to configure Claude Code for three popular providers (Kimi K2, DeepSeek, and GLM) using Windows Subsystem for Linux (WSL).

Prerequisites

- Windows 10/11 with WSL installed

- Claude Code CLI installed

- API tokens for Kimi K2, DeepSeek, and/or GLM

- Basic familiarity with bash commands
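You can confirm the first two prerequisites from inside your WSL terminal with a quick check. The function below is a hypothetical sketch. It assumes two things: that the string "microsoft" in /proc/version indicates WSL (WSL kernels identify themselves this way), and that Claude Code is on your PATH as claude.

```bash
# Hypothetical prerequisite check for this guide; run it inside your WSL terminal.
check_prereqs() {
  # WSL 2 kernels report "microsoft" and WSL 1 reports "Microsoft" in /proc/version.
  if grep -qi microsoft /proc/version 2>/dev/null; then
    echo "WSL: detected"
  else
    echo "WSL: not detected"
  fi

  # Claude Code is expected on the PATH as `claude`.
  if command -v claude >/dev/null 2>&1; then
    echo "claude: found at $(command -v claude)"
  else
    echo "claude: not found on PATH"
  fi

  # The aliases in this guide live in ~/.bash_aliases, so bash is assumed.
  echo "bash: ${BASH_VERSION:-not running under bash}"
}

check_prereqs
```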

Why Use Multiple AI Providers with Claude Code?

Different AI providers offer unique advantages:

- Kimi K2: Strong Chinese-language processing, with open weights that allow local deployment

- DeepSeek: Strong performance in coding tasks and mathematical reasoning

- GLM: Optimized for conversational AI and general-purpose tasks

Step 1: Create Environment Files for Each AI Provider

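First, we’ll create a separate environment file for each AI provider. Each file sets the two variables Claude Code uses to reach a non-default endpoint: ANTHROPIC_BASE_URL (where requests are sent) and ANTHROPIC_AUTH_TOKEN (the API key sent with them).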
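The three files differ only in provider name, base URL, and token, so you can also create them with a small shell function instead of a text editor. The sketch below uses a hypothetical helper, write_claude_env. It writes the same two export lines used in each file and gives the file owner-only (600) permissions. The optional fourth argument, a target directory that defaults to $HOME, is an assumption added for flexibility.

```bash
# Hypothetical helper that writes one provider env file, e.g. ~/.claude-glm-env.
# Arguments: provider name, base URL, API token, optional target directory.
write_claude_env() {
  if [ $# -lt 3 ]; then
    echo "usage: write_claude_env <name> <base_url> <token> [dir]" >&2
    return 2
  fi
  local name="$1" base_url="$2" token="$3" dir="${4:-$HOME}"
  local file="$dir/.claude-$name-env"

  # umask 077 inside a subshell means a newly created file starts as mode 600.
  (
    umask 077
    cat > "$file" <<EOF
export ANTHROPIC_BASE_URL="$base_url"
export ANTHROPIC_AUTH_TOKEN="$token"
EOF
  )

  # umask does not change a file that already existed, so set the mode explicitly too.
  chmod 600 "$file"
  echo "wrote $file"
}
```

For example, write_claude_env glm "https://api.z.ai/api/anthropic" "your_glm_token_here" creates ~/.claude-glm-env. Repeat the call for kimi and deepseek with their base URLs. Typing a real token on the command line also saves it in your shell history, so you may prefer to edit the files by hand.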

Creating the Kimi K2 Environment File

Create the ~/.claude-kimi-env file:

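# Moonshot's Anthropic-compatible endpoint (https://api.moonshot.cn/v1 is its OpenAI-style API)
export ANTHROPIC_BASE_URL="https://api.moonshot.cn/anthropic"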

export ANTHROPIC_AUTH_TOKEN="your_kimi_token_here"

Creating the DeepSeek Environment File

Create the ~/.claude-deepseek-env file:

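# DeepSeek's Anthropic-compatible endpoint (https://api.deepseek.com/v1 is its OpenAI-style API)
export ANTHROPIC_BASE_URL="https://api.deepseek.com/anthropic"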

export ANTHROPIC_AUTH_TOKEN="your_deepseek_token_here"

Creating the GLM Environment File

Create the ~/.claude-glm-env file:

export ANTHROPIC_BASE_URL="https://api.z.ai/api/anthropic"

export ANTHROPIC_AUTH_TOKEN="your_glm_token_here"

Step 2: Set Up Convenient Aliases

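To make switching between AI providers seamless, we’ll create bash aliases that load the matching environment file and then launch Claude Code. The --dangerously-skip-permissions flag used below makes Claude Code edit files and run commands without asking for approval first. Remove it from the aliases if you want to keep those prompts.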

Create or edit the ~/.bash_aliases file and add the following aliases:

# Custom aliases

# Claude GLM alias

alias claude-glm='source ~/.claude-glm-env && claude --dangerously-skip-permissions'

# Claude Kimi alias

alias claude-kimi='source ~/.claude-kimi-env && claude --dangerously-skip-permissions'

# Claude DeepSeek alias

alias claude-deepseek='source ~/.claude-deepseek-env && claude --dangerously-skip-permissions'

# Add more aliases below this line

Step 3: Ensure Aliases Load Automatically in WSL

For the aliases to work every time you start WSL, verify that your ~/.bashrc file includes the following lines (they should be there by default):

if [ -f ~/.bash_aliases ]; then

. ~/.bash_aliases

fi

Step 4: Apply the Configuration

To use the new aliases immediately without restarting WSL, run:

source ~/.bash_aliases

How to Use Your New Claude Code Setup

Now you can easily switch between AI providers using simple commands:

- claude-kimi: Launch Claude Code with Kimi K2

- claude-deepseek: Launch Claude Code with DeepSeek

- claude-glm: Launch Claude Code with GLM
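The aliases have one side effect worth knowing about. Each alias sources its env file into your current shell, so ANTHROPIC_BASE_URL and ANTHROPIC_AUTH_TOKEN stay set after Claude Code exits. A later plain claude command will still talk to the last provider. The same applies to any extra variable one file sets, such as ANTHROPIC_MODEL: it carries over into the next alias you run. If that matters to you, a function that loads the file inside a subshell avoids the problem. The sketch below is an optional alternative, not part of the setup above. The names claude_with and claude_which and the CLAUDE_ENV_DIR override are hypothetical.

```bash
# Hypothetical alternative to the aliases: load a provider env file in a subshell
# so ANTHROPIC_* variables do not stay set in your interactive shell afterwards.
# CLAUDE_ENV_DIR optionally overrides where the env files live (default: $HOME).
claude_with() {
  if [ $# -lt 1 ]; then
    echo "usage: claude_with <provider> [claude arguments...]" >&2
    return 2
  fi
  local env_file="${CLAUDE_ENV_DIR:-$HOME}/.claude-$1-env"
  shift
  if [ ! -f "$env_file" ]; then
    echo "no such file: $env_file" >&2
    return 1
  fi
  # ( ... ) runs in a child shell; its exports disappear when Claude Code exits.
  ( source "$env_file" && claude "$@" )
}

# Print the base URL the current shell would hand to Claude Code.
claude_which() {
  echo "ANTHROPIC_BASE_URL=${ANTHROPIC_BASE_URL:-<unset>}"
}
```

After adding both functions to ~/.bash_aliases, claude_with glm --dangerously-skip-permissions starts a GLM session. Running claude_which afterwards should show the same value your shell had before.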

Security Best Practices

Important Security Tips:

- Never commit environment files to version control

- Use strong, unique API tokens for each provider

- Regularly rotate your API keys

- Set appropriate file permissions:

chmod 600 ~/.claude-*-env
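To verify the chmod step, a short audit loop can report any provider file that other users can still read. This sketch assumes GNU stat (the stat -c '%a' form, standard on Ubuntu under WSL) and the ~/.claude-*-env naming used in this guide. The name audit_claude_env_perms is hypothetical.

```bash
# Hypothetical audit: report provider env files whose mode is not 600.
# Uses GNU stat's -c '%a' (octal permission bits).
audit_claude_env_perms() {
  local dir="${1:-$HOME}" f mode rc=0
  for f in "$dir"/.claude-*-env; do
    [ -e "$f" ] || continue   # the glob stays literal when nothing matches
    mode=$(stat -c '%a' "$f")
    if [ "$mode" = "600" ]; then
      echo "ok   $f"
    else
      echo "WARN $f has mode $mode (expected 600)"
      rc=1
    fi
  done
  return "$rc"
}
```

Keep these files in your Linux home directory rather than under /mnt/c. On Windows drives mounted without the metadata option (the WSL default), chmod changes are not stored, so mode 600 cannot be enforced there.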

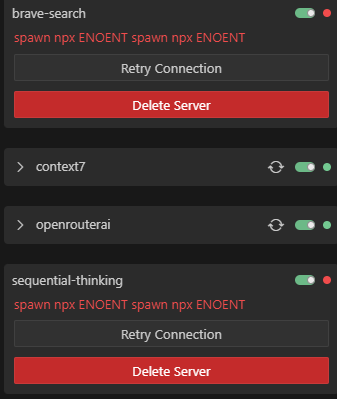

Troubleshooting Common Issues

Aliases Not Working

If your aliases aren’t working after starting WSL:

- Check that ~/.bash_aliases exists

- Verify that ~/.bashrc sources the aliases file

- Run source ~/.bashrc to reload the configuration
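The three checks above can be run in one go with a small diagnostic function. This is a sketch: diagnose_aliases is a hypothetical name. It looks for the aliases file, greps ~/.bashrc for a reference to .bash_aliases, and lists any claude-* aliases defined in the shell where it runs.

```bash
# Hypothetical diagnostic for the "aliases not working" checks.
# Arguments default to ~/.bash_aliases and ~/.bashrc.
diagnose_aliases() {
  local aliases_file="${1:-$HOME/.bash_aliases}" rc_file="${2:-$HOME/.bashrc}"

  # Check 1: does the aliases file exist?
  if [ -f "$aliases_file" ]; then
    echo "found $aliases_file"
  else
    echo "missing $aliases_file"
  fi

  # Check 2: does .bashrc mention it (as the Step 3 snippet does)?
  if grep -q '\.bash_aliases' "$rc_file" 2>/dev/null; then
    echo "$rc_file references .bash_aliases"
  else
    echo "$rc_file does not reference .bash_aliases"
  fi

  # Check 3: which claude-* aliases are defined in this shell?
  alias | grep 'claude-' || echo "no claude-* aliases defined in this shell"
}
```

Define the function in your interactive shell, for example by sourcing a file that contains it. A separately executed script starts a new non-interactive shell that does not load your aliases, so its third check would always come back empty.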

API Connection Issues

If you encounter API connection problems:

- Verify your API tokens are correct

- Check if the API endpoints are accessible from your network

- Ensure each base URL is the provider’s Anthropic-compatible endpoint, with no typos or stray whitespace
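For the endpoint checks, the sketch below splits the work into two hypothetical functions. check_base_url does basic format checks: the URL must be non-empty, contain no whitespace, and use https. probe_endpoint uses curl to see whether the host answers at all. Any HTTP status code, even 401 or 404, means the endpoint is reachable from your network, while curl reports 000 when it could not connect. Neither function can confirm that the token or the exact path is correct.

```bash
# Hypothetical helpers for the API connection checks.

# Basic format check: non-empty, no whitespace, https scheme.
check_base_url() {
  local url="$1"
  if [ -z "$url" ]; then
    echo "base URL is empty"
    return 1
  fi
  case "$url" in
    *[[:space:]]*) echo "base URL contains whitespace: '$url'"; return 1 ;;
    https://*)     echo "ok: $url" ;;
    *)             echo "expected an https:// URL: $url"; return 1 ;;
  esac
}

# Reachability check: prints the HTTP status code; 000 means no connection was made.
probe_endpoint() {
  local url="${1:-${ANTHROPIC_BASE_URL:-}}"
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 10 "$url"
}
```

For example: source ~/.claude-glm-env && check_base_url "$ANTHROPIC_BASE_URL" && probe_endpoint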

Advanced Configuration Tips

Adding Model-Specific Parameters

You can extend your environment files to include model-specific parameters:

export ANTHROPIC_BASE_URL="https://api.deepseek.com/anthropic"

export ANTHROPIC_AUTH_TOKEN="your_token"

export ANTHROPIC_MODEL="deepseek-chat"

Creating Project-Specific Configurations

For different projects, you might want different AI providers. Consider creating project-specific environment files and aliases.
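One way to do this is to keep an env file inside each project and pick it up automatically. The sketch below assumes a file named .claude-env at the project root, a naming convention chosen only for this example. It searches the current directory and its parents for that file, then launches Claude Code in a subshell with the file loaded. If it finds none, it falls back to your current environment. The function names are hypothetical.

```bash
# Hypothetical per-project launcher built around a .claude-env file.

# Print the nearest .claude-env path, searching upward from $1 (default: $PWD).
find_project_env() {
  local dir
  dir=$(cd "${1:-$PWD}" && pwd) || return 1   # absolute path, so the loop stops at /
  while :; do
    if [ -f "$dir/.claude-env" ]; then
      echo "$dir/.claude-env"
      return 0
    fi
    [ "$dir" = "/" ] && return 1
    dir=$(dirname "$dir")
  done
}

# Start Claude Code with the project's env file loaded in a subshell.
claude_project() {
  local env_file
  if env_file=$(find_project_env); then
    ( source "$env_file" && claude "$@" )
  else
    echo "no .claude-env found; using the current environment" >&2
    claude "$@"
  fi
}
```

Because a project-level .claude-env holds an API token, add it to that project’s .gitignore so it is never committed.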

Conclusion

You’ve successfully configured Claude Code to work with multiple AI providers on Windows WSL. This setup gives you the flexibility to choose the best AI provider for each task while maintaining a consistent development workflow.

The combination of environment files and bash aliases provides a clean, secure, and efficient way to manage multiple AI provider configurations. Whether you’re working with Kimi K2’s Chinese language capabilities, DeepSeek’s coding expertise, or GLM’s conversational strengths, you can now switch between them effortlessly.